How Does AlphaZero Play Chess?

By now you've heard about the new kid on the chess-engine block, AlphaZero, and its crushing match win vs Stockfish, the strongest open-source chess engine.

The reactions from the chess community to this match ranged from admiration to utter disbelief.

But how does AlphaZero actually work?

How is it different from other engines and why is it so much better? In this two-part article I’ll try to explain a bit of what goes on under AlphaZero’s hood.

First, let’s reflect on what happened. AlphaZero was developed by DeepMind (a Google-owned company) to specialize in learning how to play two-player, alternate-move games. It was primed with the rules of chess, and nothing else.

It then started learning chess by playing games against itself. Game one would have involved totally random moves. At the end of this game, AlphaZero had learned that the losing side had done stuff that wasn’t all that smart, and that the winning side had played better. AlphaZero had taught itself its first chess lesson. The quality of chess in game two was a just a tiny bit better than the first.

Nine hours and 44 million games of split-personality chess later, AlphaZero had (very possibly) taught itself enough to become the greatest chess player, silicon- or carbon-based, of all time.

How on earth did it do it?

Google headquarters in London from inside, with the DeepMind section on the eighth floor. | Photo: Maria Emelianova/Chess.com.

It didn’t calculate more variations than Stockfish.

Quite the opposite in fact: Stockfish examined 70 million positions per second while AlphaZero contented itself with about 0.1 percent of that: 80,000 per second. This brings to mind a remark made by Jonathan Rowson after Michael Adams crushed him in a match in 1998: “I was amazed at how little he saw.”

Stronger players tend to calculate fewer variations than weaker ones. Instead their highly-honed intuition guides them to focus their calculation on the most relevant lines. This is exactly what AlphaZero did. It taught itself chess in quite a human-like way, developing an “intuition” like no other chess machine has ever done, and it combined this with an amount of cold calculation.

Let’s see how it did that.

IM Danny Rensch explains the AlphaZero match in a series of videos on Twitch.

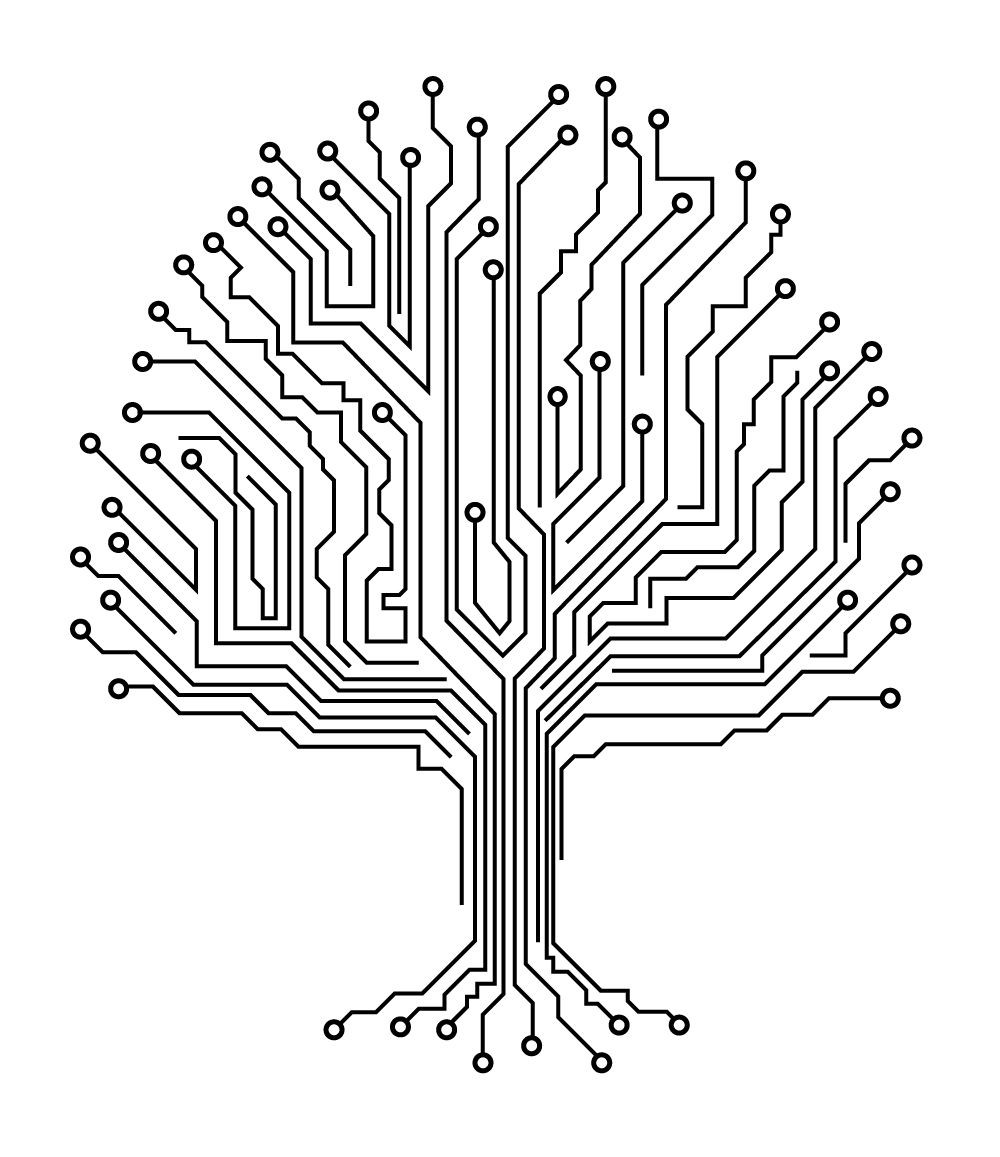

The Analysis Tree

Chess engines use a tree-like structure to calculate variations, and use an evaluation function to assign the position at the end of a variation a value like +1.5 (White’s advantage is worth a pawn and a half) or -9.0 (Black’s advantage is worth a queen). AlphaZero’s approach to both calculating variations and evaluating positions is radically different to what other engines do.

All popular chess engines are based on the minimax algorithm, which is a fancy name that simply means you pick the move that gives you the biggest advantage regardless of what the opponent plays. Minimax is invariably enhanced with alpha-beta pruning, which is used to reduce the size of the tree of variations to be examined. Here’s an extreme example of how this pruning works: Say an engine is considering a move and sees its opponent has 20 feasible replies. One of those replies leads to a forced checkmate. Then the engine can abandon (or “cutoff”) the move it was considering, no matter how well it would stand after any of the other 19 replies.

Another issue is that if an engine prunes away moves that only seem bad, e.g. those that lose material, it will fail to consider any kind of sacrifice, which is partly why early engines were so materialistic. In current engines like Stockfish, alpha-beta pruning is combined with a range of other chess-specific enhancements such the killer-move heuristic (a strong move in another similar variation is likely to be strong here), counter-move heuristic (some moves have natural responses regardless of position — I bet you’ve often met axb5 with axb5, right?) and many others.

AlphaZero, in contrast, uses Monte Carlo Tree Search, or MCTS for short. Monte Carlo is famous for its casinos, so when you see this term in a computing context it means there’s something random going on. An engine using pure MCTS would evaluate a position by generating a number of move sequences (called “playouts”) from that position randomly, and averaging the final scores (win/draw/loss) that they yield. This approach may seem altogether too simple, but if you think about it you’ll realize it’s actually quite a plausible way of evaluating a position.

The Monte Carlo Casino.

AlphaZero creates a number of playouts on each move (800 during its training). It also augments pure MCTS by preferring moves that it has not tried (much) already, that seem probable and that seem to lead to “good” positions, where “good” means that the evaluation function (more on this next article) gives them a high value. It’s really creating semi-random playouts, lines that seem appropriate to its ever-improving evaluation function. Isn’t this quite like how you calculate? By focussing on plausible lines of play?

Notice that so far there’s absolutely nothing chess-specific in what AlphaZero is doing. In my next article, when we look at how AlphaZero learns to evaluate chess positions, we’ll see there’s absolutely nothing chess-specific there either!

Like a newborn baby, AlphaZero came into the world with little knowledge, but is massively geared to learn. One weakness of MCTS is that since it’s based on creating semi-random playouts, it can get it completely wrong in tense positions where there is one precise line of optimal play. If it doesn’t randomly select this line, it is likely to blunder. This blindness was probably what caused AlphaZero’s Go playing predecessor, AlphaGo, to lose a game to 18-time world Go champion Lee Sedol. It seems not to have been an issue in the match with Stockfish, however.

MCTS has been used previously for two-player gameplay, but was found to perform much worse than the well-established minimax plus alpha-beta approach. In AlphaZero, MCTS combines really well with the employed neural network-based evaluation function.

In my next article, I’ll explain more about this neural network and especially the fascinating way it learns, on its own, how to evaluate chess positions. I’ll also describe the hardware AlphaZero runs on, and make some predictions about how all this will impact chess as we know it.

What do you think about how AlphaZero plays chess? Let us know in the comments.

Corrections: AlphaZero creates a number of playouts on each move, not 800. That was during training.